Part 5 – Filtering in Digital Audio

Most DACs will have some information in their specifications about the types of filtering they use. As these filters are an incredibly important part of the product, it is worthwhile explaining why and how they are used.

To understand why we need a filter, it helps to start at the beginning, when an analogue signal enters an ADC during the recording / production process. (This is significant, as the filter within an ADC has almost as much impact on what we hear during playback as the filter within a DAC.)

We have previously discussed how audio is sampled using an ADC: the analogue voltage is converted into a digital representation, formed from a series of ‘samples’. The lowest sample rate used in audio is typically 44,100 samples per second (S/s). This sample rate (44.1kS/s) is used largely because of the Nyquist Theorem, which states that the sample rate of digital audio needs to be at least twice the highest frequency in the audio being sampled. The highest frequency which can be sampled (half of the sample rate) is defined as the ‘Nyquist frequency’. As the human range of hearing extends up to 20,000Hz, accurately sampling this frequency range requires a sample rate of at least 40,000S/s.

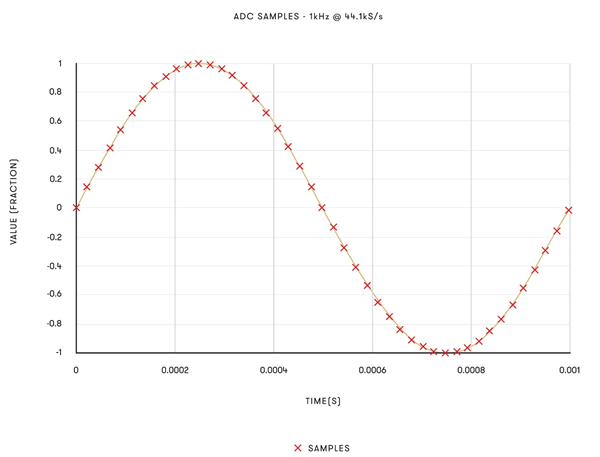

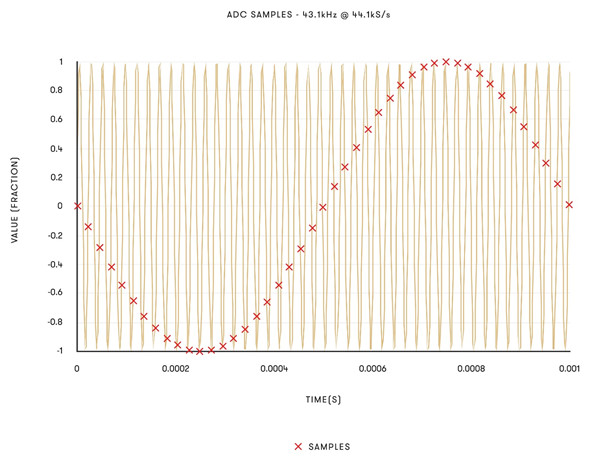

However, what happens if what we are sampling doesn’t ‘fit’ into our sample rate’s valid range, between 0Hz and the Nyquist frequency? If this occurs, then the frequency components above the Nyquist frequency are ‘aliased’ down below it. This sounds counterintuitive, but it is illustrated here:

The above graphs show two signals, one at 1kHz and one at 43.1kHz, both sampled at 44,100 samples per second (44.1kS/s). Note that sampling the 43.1kHz signal produces samples which are indistinguishable from those of the 1kHz tone (though phase inverted). If this 43.1kHz signal were passed through the ADC, a 1kHz tone would therefore be heard on playback. This means that the ADC must remove anything which does not ‘fit’ between 0Hz and the Nyquist frequency, to avoid these aliases affecting the audio.
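The aliasing described above can be checked numerically. The following is a minimal sketch using only the Python standard library; the function name `sample_sine` and the choice of 64 samples are illustrative assumptions, not part of any real ADC.

```python
import math

SAMPLE_RATE = 44_100  # samples per second


def sample_sine(freq_hz, num_samples, sample_rate=SAMPLE_RATE):
    """Return the value of a unit-amplitude sine wave at each sample instant."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]


tone_1k = sample_sine(1_000, 64)
tone_43k = sample_sine(43_100, 64)

# If the 43.1kHz samples are a phase-inverted copy of the 1kHz samples,
# adding the two sets together should give (almost exactly) zero everywhere.
max_difference = max(abs(a + b) for a, b in zip(tone_1k, tone_43k))
print(f"largest difference from an inverted 1kHz tone: {max_difference:.2e}")
```

The printed difference is limited only by floating-point rounding, which is another way of saying that once the samples have been taken, nothing in the data distinguishes the two tones.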

The removal of anything which does not fit between 0Hz and Nyquist frequency is carried out by way of a low-pass filter. This filter removes any content above a certain frequency, and allows anything below that frequency to pass through, ideally unchanged. This filter can be implemented in either the digital or analogue realm.

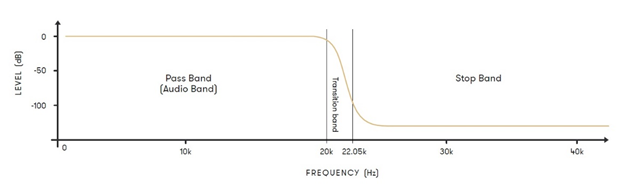

It would seem that the most obvious solution to the aliasing problem caused by running an ADC at a sample rate of 44.1kS/s is to implement a filter which does nothing at 20,000Hz, but cuts everything above 20,001Hz. This would remove any unwanted aliases from the A/D conversion, while ensuring the audio band remains unaffected. However, such a filter is highly inadvisable. If it were a digital filter, the computing power required to run it would be excessive. In any case, filters do not cut off instantly: they reduce the amplitude of the signal progressively above a given frequency, along a slope measured in decibels per octave. For this reason, the audio is sampled at a higher rate than double the highest frequency we are trying to record (44,100S/s rather than 40,000S/s), which leaves some room for the filter to work. The filter can now act between 20,000Hz and 22,050Hz without aliasing becoming an issue, while leaving the audio frequencies humans can hear unaffected.

This diagram illustrates a low-pass filter for 44.1kHz audio.

This is still an extremely narrow ‘transition band’ to play with. If this is done with an analogue filter, the filter will have to be very steep. This is problematic, as analogue filters aren’t phase linear (the filter will delay certain frequencies more than others, causing audible issues) and, being built from physical components, no two are guaranteed to be identical. This is acceptable when the filter is working at, say, 100kHz, but at 20kHz it becomes very problematic. As such, the filter used to remove content at and above the Nyquist frequency is implemented in the digital domain, in DSP (Digital Signal Processing).
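To put a rough number on how narrow this transition band is, the sketch below expresses it in octaves and estimates the slope a filter would need. The 96dB attenuation target is an assumption chosen for illustration (it is roughly the dynamic range of 16-bit audio), not a figure from any specification, and the function name is hypothetical.

```python
import math

PASSBAND_EDGE_HZ = 20_000      # top of the audio band
NYQUIST_HZ = 44_100 / 2        # 22,050Hz
TARGET_ATTENUATION_DB = 96     # assumption: illustrative target only


def required_slope_db_per_octave(passband_hz, stopband_hz, attenuation_db):
    """Average slope needed to reach attenuation_db between the two edges."""
    octaves = math.log2(stopband_hz / passband_hz)
    return attenuation_db / octaves


transition_octaves = math.log2(NYQUIST_HZ / PASSBAND_EDGE_HZ)
slope = required_slope_db_per_octave(
    PASSBAND_EDGE_HZ, NYQUIST_HZ, TARGET_ATTENUATION_DB)

print(f"transition band: {transition_octaves:.3f} octaves")
print(f"required slope:  {slope:.0f} dB per octave")
```

Under these assumptions the transition band is about a seventh of an octave, so the slope comes out at several hundred decibels per octave. For comparison, each order of a simple analogue filter contributes roughly 6dB per octave, which gives a sense of why a steep analogue filter at 20kHz is impractical.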

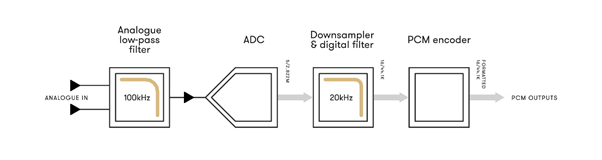

In audio recording, it is common practice to use a high sample rate ADC and perform the filtering at the Nyquist frequency on the digital data instead. An ADC which works this way is known as an ‘Oversampling ADC’. The block diagram for a dCS oversampling ADC producing 16-bit 44.1kS/s data is shown here:

The analogue low-pass filter removes frequencies above 100kHz from the analogue signal, as these would cause aliasing. As previously discussed, this analogue filter acting at 100kHz can be gentle, and it acts in a region where non-linearities are not as critical.

The ADC stage then converts the signal to high-speed digital data. In a dCS ADC, this stage is a Ring DAC in a feedback loop, which produces 5-bit data sampled at approximately 2.822 million samples per second (2.822MS/s).

The Downsampler converts the digital data to 16-bit data at 44,100 samples per second. As part of this conversion, the data passes through a sharp digital filter, which effectively removes content above 22.05kHz. (Frequencies higher than this would cause aliases if not filtered out.) The PCM encoder then formats the data into standard SPDIF, AES/EBU and SDIF-2 serial formats, complete with status and message information.
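The role of the filter in downsampling can be illustrated with a small experiment. This is a sketch under stated assumptions, not a model of the dCS Downsampler: the oversampling ratio of 8, the 255-tap Blackman-windowed sinc filter, the 21kHz cut-off and the function names are all chosen purely to keep the example short, and the 5-bit to 16-bit conversion is not modelled.

```python
import math

TARGET_RATE = 44_100
FACTOR = 8                       # assumption: small ratio for illustration
HIGH_RATE = TARGET_RATE * FACTOR


def lowpass_taps(cutoff_hz, sample_rate, num_taps):
    """Blackman-windowed sinc low-pass coefficients (num_taps must be odd)."""
    centre = (num_taps - 1) / 2
    fc = cutoff_hz / sample_rate  # cut-off in cycles per sample
    taps = []
    for n in range(num_taps):
        x = n - centre
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = (0.42 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
                  + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        taps.append(ideal * window)
    gain = sum(taps)              # normalise so that 0Hz passes at unity gain
    return [t / gain for t in taps]


def decimate(signal, factor, taps=None):
    """Keep every factor-th sample, low-pass filtering first if taps are given."""
    if taps is None:
        return signal[::factor]
    out = []
    for start in range(0, len(signal) - len(taps) + 1, factor):
        block = signal[start:start + len(taps)]
        out.append(sum(s * t for s, t in zip(block, taps)))
    return out


# A 43.1kHz tone at the high rate: above the 22.05kHz Nyquist frequency
# of the 44.1kS/s output, so it must not survive the conversion.
tone = [math.sin(2 * math.pi * 43_100 * n / HIGH_RATE) for n in range(4096)]

naive_peak = max(abs(v) for v in decimate(tone, FACTOR))
filtered_peak = max(abs(v) for v in decimate(
    tone, FACTOR, lowpass_taps(21_000, HIGH_RATE, 255)))

print(f"peak after decimation without filter: {naive_peak:.3f}")
print(f"peak after decimation with filter:    {filtered_peak:.6f}")
```

Without the filter, the 43.1kHz tone reappears at close to full amplitude as an alias in the 44.1kS/s data. With the filter applied before samples are discarded, almost nothing of it remains.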

The digital filter used in the Downsampler involves its own set of trade-offs. To simplify this greatly, digital filters work by passing each sample through a series of multipliers, with these multipliers collectively acting to filter higher frequencies from the signal. The pattern formed by the multiplier values is referred to as the filter ‘shape’ (for example symmetrical or ‘half-band’ filters, and asymmetrical filters). Different filter shapes have different impacts on the sound.
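The idea of passing samples through a series of multipliers can be sketched in a few lines. The three coefficient values below are hypothetical and far shorter than any practical audio filter; they are chosen only because their effect is easy to verify by hand.

```python
def fir_filter(samples, coefficients):
    """Each output is the sum of the most recent inputs, each multiplied
    by its own coefficient (the 'series of multipliers')."""
    history = [0.0] * len(coefficients)
    output = []
    for sample in samples:
        history = [sample] + history[:-1]   # newest sample first
        output.append(sum(c * h for c, h in zip(coefficients, history)))
    return output


coefficients = [0.25, 0.5, 0.25]    # hypothetical, symmetrical set of multipliers

steady = [1.0] * 16                 # 0Hz: the lowest possible frequency
fastest = [1.0, -1.0] * 8           # alternates every sample: the Nyquist frequency

steady_out = fir_filter(steady, coefficients)
fastest_out = fir_filter(fastest, coefficients)

# Skip the first two outputs, where the filter's history is still filling up.
print("0Hz input settles to:    ", steady_out[2:5])
print("Nyquist input settles to:", fastest_out[2:5])
```

Once the filter has filled with data, the steady signal passes through unchanged while the signal alternating at the Nyquist frequency is cancelled completely, which is low-pass behaviour in its simplest form.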

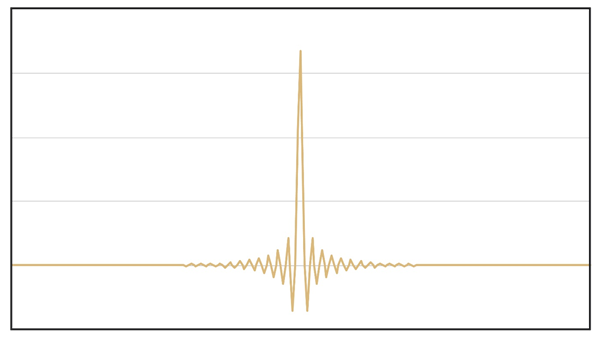

This diagram illustrates an example of the response of a symmetrical digital filter. Such filters are so called because they produce symmetrical ‘ringing’ when driven with an impulse (also known as a transient): ringing appears before the impulse as well as after it, which is an acausal response. The effect is more pronounced at lower sample rates:

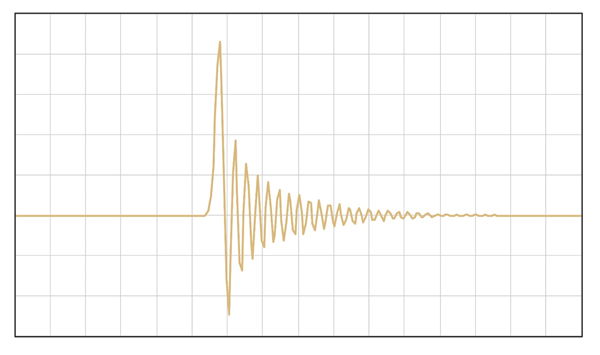

This diagram illustrates an example of an asymmetrical filter response. This filter type has a completely different impulse response – here, there is no ringing before the impulse, but there is more ringing after the impulse when compared to a symmetrical filter:

Because the ADC must use a filter to remove aliases, and a digital filter acting at the Nyquist frequency is preferable to a harsh analogue filter, the digital filtering in the ADC will introduce pre- and/or post-ringing at the recording stage. This is a good trade-off to make, and the filter choice here is important.
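The symmetrical ringing described above can be seen directly in a filter's coefficients, because the response of an FIR filter to a single impulse is simply its list of coefficients. The sketch below builds a Hann-windowed sinc low-pass filter; the 63-tap length, the 20kHz cut-off and the function name are assumptions for illustration, not the design of any particular product.

```python
import math


def windowed_sinc(cutoff_hz, sample_rate, num_taps):
    """Hann-windowed sinc low-pass coefficients (num_taps must be odd)."""
    centre = (num_taps - 1) / 2
    fc = cutoff_hz / sample_rate  # cut-off in cycles per sample
    taps = []
    for n in range(num_taps):
        x = n - centre
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * hann)
    return taps


# The impulse response of an FIR filter is its coefficient list.
impulse_response = windowed_sinc(20_000, 44_100, 63)

peak_index = max(range(len(impulse_response)),
                 key=lambda i: abs(impulse_response[i]))
pre_energy = sum(v * v for v in impulse_response[:peak_index])
post_energy = sum(v * v for v in impulse_response[peak_index + 1:])

print(f"main peak at tap {peak_index} of {len(impulse_response)}")
print(f"ringing energy before the peak: {pre_energy:.6f}")
print(f"ringing energy after the peak:  {post_energy:.6f}")
```

The ringing energy is split equally either side of the main peak. An asymmetrical filter would instead place less of that energy before the peak and more after it.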

Most ADCs use a symmetrical filter. This means that any digital recording will contain (necessary) pre- and post-ringing as a result of the filter which was used. The key point is that all digital recordings will include ringing from the filters, even before they reach the DAC, but this is the best approach to take, provided the filters are correctly designed and implemented within the ADC.

The other side of this topic is the DAC, where the digital audio recorded by the ADC is translated back to analogue for playback.

When a DAC reproduces an analogue waveform from digital samples, an effect similar to aliasing occurs. Due to the relationship between the frequency of the analogue audio signal and the sample rate of the digital signal, ‘copies’ of the audio spectrum being converted can be observed higher up the frequency spectrum. While these images exist at frequencies outside the range of human hearing, their presence can have a negative impact on sound.

There are two reasons for this. Firstly, content at frequencies above 20,000Hz can still interact with, and have an audible impact on, frequencies lower down in the audible spectrum (0Hz to 20,000Hz).

Secondly, if these images, known as Nyquist images, are not removed from an audio signal, then the equipment in an audio system may try to reproduce these higher frequencies, which would put additional pressure on that system’s transducers (particularly those responsible for reproducing high frequencies) and amplifiers. Removing Nyquist images means an amplifier has more power available for reproducing the parts of an audio signal that we do want to hear, which leads to better performance and a direct positive impact on sound.
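To make the location of these Nyquist images concrete: for a tone at frequency f sampled at rate fs, images appear at every multiple of fs, plus and minus f. The following sketch lists the first few; the function name and the `num_multiples` parameter are illustrative assumptions.

```python
def image_frequencies(tone_hz, sample_rate, num_multiples=2):
    """Return the image frequencies either side of each multiple of the sample rate."""
    images = []
    for k in range(1, num_multiples + 1):
        images.append(k * sample_rate - tone_hz)
        images.append(k * sample_rate + tone_hz)
    return images


print(image_frequencies(1_000, 44_100))    # [43100, 45100, 87200, 89200]
print(image_frequencies(20_000, 44_100))   # [24100, 64100, 68200, 108200]
```

For 44.1kS/s audio, the image of a 20,000Hz component sits at 24,100Hz, only a little above the audio band, which is why the DAC's low-pass filter has as little room to work in as the ADC's.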

As in an ADC, the solution to the problem posed by Nyquist images in D/A conversion is to filter anything above the highest desired frequency of the audio signal by using a low-pass filter. This allows Nyquist images to be eliminated from the audio signal, without impacting the music we want to hear. The question of how a low-pass filter should be designed is a complex and sensitive topic, and it’s important to note that there is no one-size-fits-all solution.

Of course, when working with source material at higher sample rates than 44.1kS/s, such as hi-res streamed audio, the requirements of the filter in the DAC change, because the transition band is naturally wider. Most DAC manufacturers offer a single set of filters which are cascaded for different sample rates. Given the different filtering requirements posed by converting different sample rates, this is not the optimal approach to take in a high-end audio system.

For this reason, the filters found within dCS products and the Ring DAC are written specifically for each sample frequency by dCS engineers. In addition, there are multiple filter choices available for each sample frequency in a dCS product. There is no one right answer to filtering, as it depends on the listener’s preference and the audio being reproduced, so the user can choose from a set of very high-quality filters, each written specifically for the Ring DAC and for the sample frequency of the audio.

The next post will explore how digital filters are designed for use in audio products, covering factors such as cut-off frequency, filter length and windowing.