Part 2 - Jitter (intrinsic)

There are two main kinds of jitter: intrinsic and interface. Intrinsic jitter refers to jitter which is produced inside of a product like a DAC, through effects like phase noise on the oscillator. Interface jitter refers to jitter which is picked up by the interface(s) used to transfer the audio and clock signals. This could come either as interference picked up by the cable itself, or through the cable essentially acting as a filter for certain frequencies, impacting the integrity of the square wave (the output of the clock circuitry) passing through it.

There are several varieties of quartz crystal oscillators, but Voltage Controlled Crystal Oscillators (VCXOs) and Oven Controlled Crystal Oscillators (OCXOs) are two of the most common in audio.

Voltage Controlled Oscillators, or VCOs, are also used in audio products, but these operate on a purely electronic basis, and do not use an electromechanical material such as quartz to generate signals.

Quartz oscillators tend to have better phase noise performance than VCOs, meaning the oscillator itself may be less prone to jitter. What this means in terms of overall clock design is that in a DAC with a quartz oscillator, the Phase Locked Loop, or PLL (the circuit which matches the frequency of the DAC’s clock to the clock of the incoming audio signal) can be biased towards rejecting interface jitter by means of a narrower PLL bandwidth.

This is possible as the quartz oscillator itself is less prone to phase noise and subsequently jitter. As such, if jitter should happen to be present on the interface, for example a jittery AES signal, this jitter will not be passed on to the DAC, as it will have come and gone before the PLL reacts to it. The DAC instead relies more on the oscillator for timing accuracy between individual samples which, in the case of a dCS product and its quartz crystal-based clock, is a very high level of accuracy.

The alternative would be to use a VCO as the oscillator. However, because a VCO’s phase noise performance is potentially poorer than that of a quartz oscillator, the PLL within the product may need to be biased towards rejecting intrinsic jitter instead, which is achieved by using a wider PLL bandwidth. This means any interference or cable filtering effects will have a more direct impact on the sound which, in some use cases, may be undesirable.
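The trade-off between the two PLL biases can be illustrated with a rough sketch. It assumes an idealised first-order loop, which is a simplification and not a description of any particular product: jitter arriving on the interface is low-pass filtered by the loop, while the oscillator's own phase noise is high-pass filtered. The function names and the example frequencies are hypothetical.

```python
import math

def interface_jitter_gain(f_jitter_hz, pll_bandwidth_hz):
    """Fraction of incoming (interface) jitter at f_jitter_hz that passes
    through an idealised first-order PLL (low-pass response)."""
    return 1.0 / math.sqrt(1.0 + (f_jitter_hz / pll_bandwidth_hz) ** 2)

def oscillator_jitter_gain(f_jitter_hz, pll_bandwidth_hz):
    """Fraction of the oscillator's own phase noise at f_jitter_hz that
    remains at the output of the same loop (high-pass response)."""
    ratio = f_jitter_hz / pll_bandwidth_hz
    return ratio / math.sqrt(1.0 + ratio ** 2)

# Hypothetical 1 kHz jitter component, seen by a narrow and a wide loop.
for bandwidth_hz in (10.0, 1000.0):
    passed = interface_jitter_gain(1000.0, bandwidth_hz)
    own = oscillator_jitter_gain(1000.0, bandwidth_hz)
    print(f"bandwidth {bandwidth_hz:7.1f} Hz: "
          f"interface jitter x{passed:.3f}, oscillator noise x{own:.3f}")
```

In this model the narrow loop passes only about 1% of the 1 kHz interface jitter but leaves almost all of the oscillator's own noise untouched, which is why a narrow bandwidth only makes sense when the oscillator itself is very clean.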

If this is the case, you might be wondering why any product would use a VCO as a clock source. One benefit of using a VCO over a quartz oscillator is the possibility for the clock to have a greater ‘pull range’, meaning it can lock to a wider range of signals (for example, signals running consistently too fast or too slowly).

In our experience, in the context of a high-end digital audio playback system, the quality of a DAC’s clocking should not be permanently compromised to allow for a sub-optimal source to be connected and work properly. At dCS, we opt for using a quartz-based oscillator with a high level of accuracy and stability, allowing for a pull range of +/- 300 parts per million (PPM), as per AES specification.
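To give a sense of scale for a pull range expressed in PPM, the arithmetic can be sketched as follows. The helper name is hypothetical, and 44.1kHz is used purely as an example rate.

```python
def pull_range_hz(nominal_hz, pull_ppm):
    """Return the (lowest, highest) frequency reachable for a given
    nominal frequency and a symmetric pull range in parts per million."""
    offset_hz = nominal_hz * pull_ppm / 1_000_000
    return nominal_hz - offset_hz, nominal_hz + offset_hz

low, high = pull_range_hz(44_100, 300)
print(f"+/- 300 PPM at 44.1 kHz spans {low:.2f} Hz to {high:.2f} Hz")
```

A +/- 300 PPM range at 44.1kHz works out to roughly 13Hz either side of nominal, so a source running further off-frequency than that would fall outside the range.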

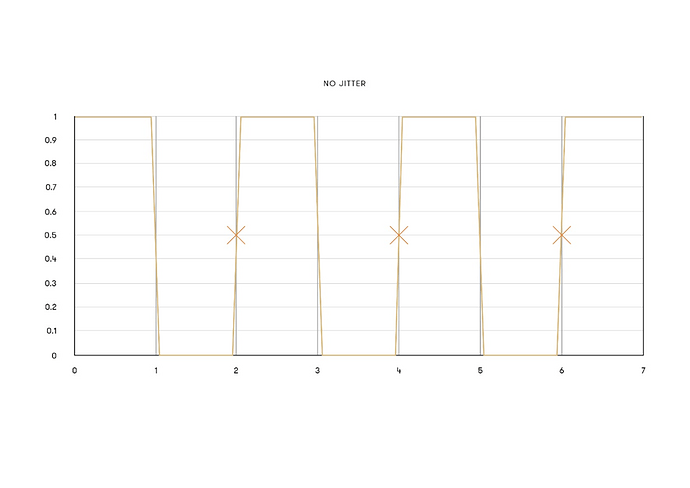

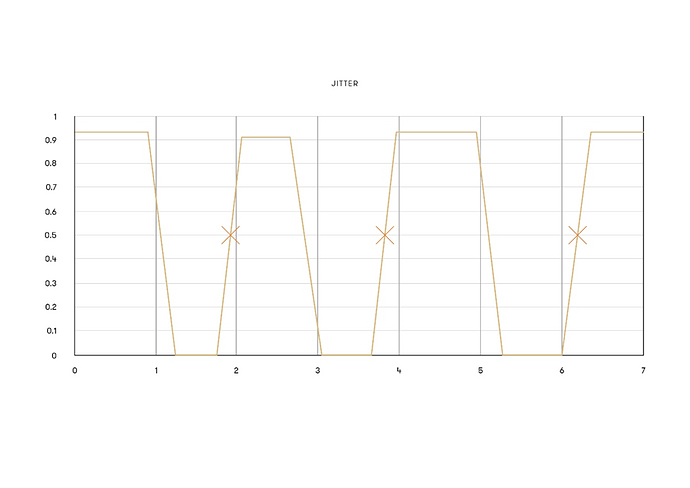

These graphs show an exaggerated example of the effect of jitter on the square wave output by a clock circuit. As previously discussed, jitter has impacted both the transition times of the wave and the peak voltages the wave can reach. This has the effect of changing the point at which the system would perceive, for argument’s sake, a 0 changing to a 1. Clock systems watch the ‘rising edge’ of the clock signal, where the voltage increases, so the point on the rising edge where the amplitude goes above 0.5 has been marked on the graphs. The timing of this is regular in the first graph: the transition points on the rising edges fall on 2, 4 and 6 on the X axis respectively. When jitter is introduced, the transition points are shifted forwards or backwards depending on the nature of the jitter. The shift is not regular; it is random.
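The threshold-crossing idea can be sketched in a few lines. The waveforms below are made-up piecewise-linear approximations, not the data behind the graphs: the first crosses 0.5 on its rising edges at 2, 4 and 6, and the second is identical except that its middle pulse only reaches 0.8, which is enough to delay that crossing.

```python
def rising_crossings(times, volts, threshold=0.5):
    """Return the times at which a piecewise-linear waveform crosses
    `threshold` on a rising edge, using linear interpolation."""
    crossings = []
    for i in range(1, len(times)):
        v0, v1 = volts[i - 1], volts[i]
        if v0 < threshold <= v1:  # rising through the threshold
            frac = (threshold - v0) / (v1 - v0)
            crossings.append(times[i - 1] + frac * (times[i] - times[i - 1]))
    return crossings

# Hypothetical sample points (time, arbitrary units).
times = [0, 1.5, 2.5, 2.75, 3.25, 3.5, 4.5, 4.75, 5.25, 5.5, 6.5, 7]
ideal = [0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1]
# Same timing, but the second pulse has a reduced peak voltage.
degraded = [0, 0, 1, 1, 0, 0, 0.8, 0.8, 0, 0, 1, 1]

print(rising_crossings(times, ideal))
print(rising_crossings(times, degraded))
```

With the reduced peak, the second crossing moves from 4 to roughly 4.125 even though the edge starts at the same moment, showing how an amplitude change alone can turn into a timing error.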

There are several factors that can cause phase noise (and, consequently, jitter) on an oscillator, and these should all be taken into account when designing a clock system.

Physical Vibrations

As the basis of a quartz clock is its piezoelectric property (the physical movement of the crystal when a voltage is applied), any external physical vibrations can cause inaccuracy in the clock. The extraneous movement does not need to be vigorous to cause inaccuracy. It could be as subtle as, for example, the vibrations of a CD mechanism inside the product. Any measures which can be taken to isolate the clock circuitry from the external physical vibrations should be taken, as this creates a higher level of clock performance.

Power Supply

The ability of a quartz crystal (or any piezoelectric material) to maintain a consistent oscillation frequency relies on having a stable, interference-free DC signal of the correct specification. In the case of both VCXOs and OCXOs, this means clean DC for the power supply. In the case of a VCXO, even more crucially, the control voltage needs to be stable (in this type of oscillator, the control voltage is used to make fine adjustments to the crystal’s frequency). If there is any variation in the power rails fed to the crystal, the frequency it resonates at will change. In products with a quartz-based clock, designers should always endeavour to have as clean a power supply fed to the crystal(s) as possible, at the correct voltage and frequency for the specification of the region the product is being used in.

Crosstalk

Electronic circuits can generate electromagnetic leakage. This is often seen when running high-rate signals such as digital audio signals through the copper tracks found on PCBs (printed circuit boards). The copper tracks essentially act as antennas, with the digital audio signals being radiated from the board. This interference can have an impact on associated clock circuits if they are in close proximity, negatively impacting the performance of the clock.

The correct way to eliminate this issue is to design the product’s PCBs in such a way that minimises crosstalk. Secondary to this is to ensure that any sensitive parts are separated from those which may cause interference. An even more effective method is to completely remove many potential sources of EM interference from the product, where possible, such as with the use of a Master Clock (a standalone clock with its own dedicated circuitry and power supplies).

Clock Frequency

The ideal way to design the clock inside an audio product is to have two oscillators: one running at a direct multiple of 44.1kHz, and the other running at a direct multiple of 48kHz. The reason for this is that almost all sample rates used in digital audio are multiples of these ‘base rates’ (including DSD, which runs at very high multiples of 44.1kHz). If the clock does not run at a direct multiple of the sample rate it is clocking, the maths becomes more complex and the electronics needed to generate the correct frequency are more prone to jitter.

Trying to clock a 44.1kHz signal with a 10MHz clock, for example, would require somehow synthesising 44.1kHz from 10MHz, which mathematically is not clean. As such, this type of clock will need to use methods such as asynchronous rate conversion to bring the rate down correctly. These methods invariably result in a ‘dirtier’ frequency spectrum of the clock signal, meaning that the system will be more prone to jitter.

dCS products use two oscillators, running at 2^9 (512) times the base audio rates (44.1kHz and 48kHz), so 22.5792MHz and 24.576MHz. The easy division down to any required rate results in a cleaner clock spectrum and, as a result, less jitter.
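The difference between a clean and an unclean division can be checked with exact fractions. This is only an arithmetic illustration: the constant and function names are hypothetical, and the list of rates is an example selection.

```python
from fractions import Fraction

MASTER_441_HZ = 512 * 44_100  # 22,579,200 Hz (22.5792 MHz)
MASTER_48_HZ = 512 * 48_000   # 24,576,000 Hz (24.576 MHz)

def divide_ratio(master_hz, target_hz):
    """Exact ratio between a master clock and a target rate.
    A denominator of 1 means a plain integer divider is enough."""
    return Fraction(master_hz, target_hz)

# Rates in the 44.1 kHz family, including DSD64 at 64 x 44.1 kHz.
for rate in (44_100, 88_200, 176_400, 352_800, 64 * 44_100):
    print(rate, divide_ratio(MASTER_441_HZ, rate))

# Rates in the 48 kHz family.
for rate in (48_000, 96_000, 192_000, 384_000):
    print(rate, divide_ratio(MASTER_48_HZ, rate))

# A 10 MHz clock does not divide evenly to 44.1 kHz.
print(divide_ratio(10_000_000, 44_100))
```

Every ratio in the two families comes out as a whole number, whereas 10MHz to 44.1kHz reduces to 100000/441, which cannot be produced by simple integer division.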

Clock Temperature

While clock temperature is not a source of phase noise, it can affect the performance of an oscillator. The resonant frequency of a quartz crystal is inversely proportional to its size, and its size in turn varies with temperature. As the temperature of the quartz increases, it physically expands. As the temperature decreases, it contracts. This causes changes in the resonant frequency of the quartz, as the physical size is now different. Temperature variations in digital systems should therefore be avoided, or the effects mitigated wherever possible.

There have been several methods for counteracting temperature variations inside a crystal oscillator. One approach is to use an industry-standard OCXO. An OCXO aims to remove the temperature variation of the crystal by using a Curie heating element to keep it at a stable temperature. The Curie device is a resistive heater whose resistance increases sharply when it reaches a certain temperature, effectively cutting back the heating power. The temperature will overshoot and then stabilise around the required temperature. When the temperature of the product is not stable (such as when it is powered on from cold), due to thermal delays, there will be some fluctuation in the temperature and, consequently, the frequency as the system ‘hunts’ around the target temperature. Once the crystal’s temperature has stabilised, however, the clock will output a stable frequency.

Another approach is to use a microcontroller-enhanced VCXO, as we’ve done in a number of dCS products. This approach does not use any heating elements to account for temperature variation. Instead, we utilise the large amounts of processing power available thanks to the FPGA-based design of our products to make constant adjustments to the control voltage fed to the VCXO to compensate for temperature changes.

In the case of a dCS Master Clock, such as the Rossini Clock or Vivaldi Clock, these adjustments are based on intensive measurements taken during production. During the production process, we place the clock (and the circuit board the clock is fixed to) into an Environmental Chamber. This chamber measures the clock frequency against the current controlled environmental temperature and records it onto the FPGA inside the product. The temperature is then changed, the clock measured, and the performance again logged. This process is repeated over 18 hours. This enables us to plot exactly how the VCXO in the Master Clock behaves at any given temperature, and the product retains a record of that behaviour.

This data is actioned in the product by adjusting the control voltage which is fed to the VCXO. A higher or lower voltage will create a higher or lower resonant frequency. This, combined with the product’s knowledge of its performance against temperature, ensures the clock’s output frequency is always stable. At any given normal operating temperature, the clock’s output frequency will be consistent.

This is a constant process inside the Rossini and Vivaldi Clocks, with the clock temperature regularly measured, and the control voltage adjusted if the clock temperature has varied. The result is that a new Vivaldi Clock, for example, can achieve an accuracy of better than +/- 1 PPM when shipped. Once the clock has stabilised in its environment, the accuracy typically improves to +/- 0.1 PPM.
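The general idea of compensating from stored calibration data can be sketched as a lookup table with interpolation. This is a minimal illustration only, not the firmware used in any dCS product: the table values, the use of linear interpolation, and the function name are all assumptions made for the example.

```python
from bisect import bisect_right

# Hypothetical calibration points: (temperature in deg C, control voltage
# that brought the oscillator back on frequency at that temperature).
CALIBRATION = [(15.0, 1.62), (25.0, 1.65), (35.0, 1.69), (45.0, 1.74)]

def control_voltage_for(temp_c, table=CALIBRATION):
    """Look up the control voltage for a measured temperature, linearly
    interpolating between the two nearest calibration points and
    clamping to the end points outside the calibrated range."""
    temps = [t for t, _ in table]
    if temp_c <= temps[0]:
        return table[0][1]
    if temp_c >= temps[-1]:
        return table[-1][1]
    i = bisect_right(temps, temp_c)
    (t0, v0), (t1, v1) = table[i - 1], table[i]
    return v0 + (v1 - v0) * (temp_c - t0) / (t1 - t0)

# Each time the temperature is re-measured, the voltage is re-derived.
for measured in (22.0, 30.0, 41.5):
    print(f"{measured:.1f} C -> {control_voltage_for(measured):.4f} V")
```

A real system would also need to decide how often to measure and how quickly to apply changes, which this sketch leaves out.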

In the next post, we’ll look at the other main kind of jitter: interface jitter.