For a long time, I’ve wondered how useful the ‘standard’ battery of tests (THD+N/SINAD, IMD, jitter, etc.) really is for measuring a DAC’s performance. These tests all rely on single or multiple tones, which don’t reflect all the elements that make up music and are certainly not what we listen to. I understand why humans might not be used to test new pharmaceuticals, but I don’t understand why music is not used to test audio gear.

It seems to me that the gold standard would be to compare what comes out of the DAC with what goes in… using real music. And that’s exactly what a null test attempts to do.

Null testing is fraught with difficulty, which is probably the main reason why it isn’t used much. The main problem is in aligning the two signals (what comes out with what goes in). They need to be matched in time and in amplitude. The former is best done by syncing the DAC and ADC clocks and then aligning at the sample level. The latter requires that the RMS values of the two signals be matched as closely as possible (better than 0.001dB).
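To make the two matching steps concrete, here is a rough Python sketch of a null test on two lists of samples. It is only an illustration and not how DeltaWave works internally: the function names (`rms`, `best_lag`, `null_depth_db`) and the `max_lag` search window are my own assumptions, and it only handles whole-sample offsets, which is all you should need if the DAC and ADC clocks are synced.

```python
import math

def rms(x):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def best_lag(ref, cap, max_lag):
    """Integer delay (in samples) of `cap` relative to `ref`.

    Brute-force cross-correlation over lags in [-max_lag, +max_lag].
    """
    n = min(len(ref), len(cap))
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(ref[i] * cap[i + lag] for i in range(max_lag, n - max_lag))
        if score > best_score:
            best, best_score = lag, score
    return best

def null_depth_db(ref, cap, max_lag=32):
    """Return (null depth in dB relative to the source, lag, gain)."""
    n = min(len(ref), len(cap))
    lag = best_lag(ref, cap, max_lag)
    idx = range(max_lag, n - max_lag)
    r = [ref[i] for i in idx]            # source, trimmed at the edges
    c = [cap[i + lag] for i in idx]      # capture, time-aligned to the source
    gain = rms(r) / rms(c)               # RMS level matching
    diff = [a - gain * b for a, b in zip(r, c)]
    d = rms(diff)
    depth = -math.inf if d == 0 else 20 * math.log10(d / rms(r))
    return depth, lag, gain
```

The level-matching requirement is not fussiness: a gain error of just 0.001dB is a ratio of about 1.000115, so the leftover difference is about 0.0115% of the signal, which on its own caps the null at roughly -79dB.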

There’s a massive thread on Gearspace that attempts to use null testing to rank DAC/ADC combos, with very misleading results, unfortunately. The method is severely broken.

There is a piece of software called DeltaWave that can be downloaded for free and is by far the best null-testing tool I’ve come across.

I’ve just compared 3 DACs using DeltaWave:

- dCS Scarlatti

- RME ADI-2 Pro fs R

- Okto dac8 PRO

The RME and Okto DACs are modern DACs with state-of-the-art performance according to the standard battery of tests, and would handily beat my Scarlatti in this regard, I suspect.

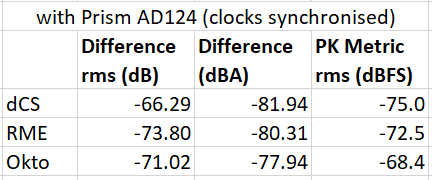

But can null testing shed any further insights? Well, here are the results of my null testing (using the same classical track as used in the Gearspace test):

In absolute terms, the RME and Okto are indeed superior to the Scarlatti - they have deeper nulls (RMS differences against the source of -73.80dB and -71.02dB respectively). However…

The RMS difference does not take into account the effects of the anti-imaging filters >20kHz, which will skew the results in favour of slow filters. The A-weighted difference does, and here the Scarlatti is superior.
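To show why the weighting matters, here is a small Python sketch that computes the RMS of a residual with and without A-weighting. It uses the standard analytic A-weighting curve and a plain DFT; the function names and the 96kHz sample rate in the example are assumptions of mine, and I’m not claiming this is how DeltaWave calculates its A-weighted figure.

```python
import cmath
import math

def a_weight_gain(f):
    """Linear A-weighting gain at frequency f in Hz (about 1.0 at 1 kHz)."""
    if f <= 0:
        return 0.0
    f2 = f * f
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return ra * 10 ** (2.0 / 20)  # +2.00 dB offset normalises 1 kHz to 0 dB

def weighted_rms(x, fs, weight=None):
    """RMS of x, optionally weighting each DFT bin by weight(frequency).

    Uses a naive O(n^2) DFT, so keep the input short.
    """
    n = len(x)
    total = 0.0
    for k in range(n // 2 + 1):
        X = sum(x[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        w = 1.0 if weight is None else weight(k * fs / n)
        # Bins other than DC and Nyquist appear twice in the full spectrum.
        scale = 1.0 if k == 0 or (n % 2 == 0 and k == n // 2) else 2.0
        total += scale * (abs(X) * w) ** 2
    return math.sqrt(total) / n

# Example: a residual that is purely ultrasonic (30 kHz tone, 96 kHz sampling).
fs, n = 96000, 320
residual = [math.sin(2 * math.pi * 30000 * i / fs) for i in range(n)]
plain = weighted_rms(residual, fs)
weighted = weighted_rms(residual, fs, a_weight_gain)
drop_db = 20 * math.log10(weighted / plain)
```

In this example `drop_db` comes out at around -15dB: the same 30kHz residual counts in full towards the plain RMS difference but is heavily discounted once A-weighted, which is why the two figures can rank DACs differently.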

The ‘PK Metric’ is a measure that the developer of the software has created, which he believes gives a better indication of the DAC’s ‘perceptual accuracy’. And again, the Scarlatti is superior.

Subjectively, the Scarlatti sounds ‘fuller’ and more laid back than the modern DACs. Null testing suggests it’s also the most perceptually accurate.

Just thought I’d share…

Mani.
